F14: Collision Avoidance Car


Collision Avoidance Car

PICTURE HERE

Abstract

The inspiration behind the Collision Avoidance Car project comes from the state-of-the-art field of self-driving cars. All major automotive companies are investing heavily in autonomous car technology. One of the more prominent autonomous cars being developed is the Google Car, which uses Lidar and photo-imaging technology to implement autonomy. The goals of the self-driving car are to reduce gridlock, eliminate traffic fatalities, and, most importantly, eliminate the monotony of driving. This project explores the fundamentals of Lidar and how this technology is being used in cutting-edge products such as the Google Car.

Objective & Scope

The project objective was to pair Lidar (a laser-based device for measuring distance) with a toy car so that the car autonomously detects and avoids obstacles. The car operates in two modes: automatic and manual. In automatic mode, the car drives on its own, avoiding obstacles to the front, rear, and sides. Whenever an obstacle is detected, the car maneuvers in the opposite direction, as long as that direction is also free of obstacles. In manual mode, the car's movements are controlled via a Bluetooth connection. In addition to the direction controls, the user can adjust the speed of the car and the distance at which obstacles are avoided.

Team Members & Roles

Eduardo Espericueta - Lidar Unit Integration

Sanjay Maharaj - Motor Integration & System Wiring

George Sebastian - Software Infrastructure (tasks / movement logic), Bluetooth Integration & Lidar

Introduction

Design Implementation

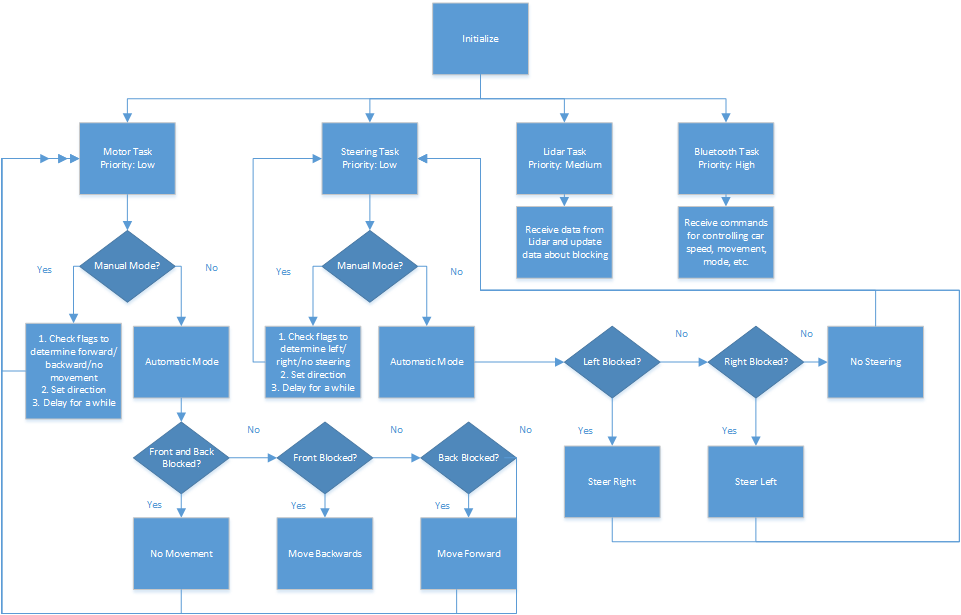

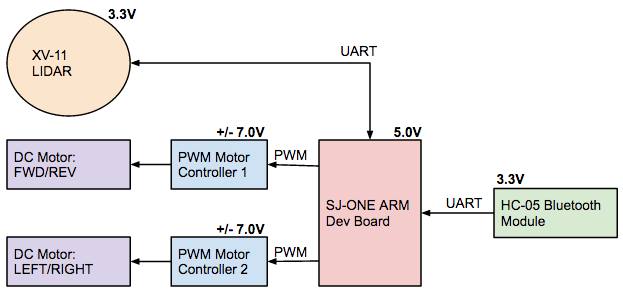

The state machine above shows the high-level operation of the autonomous car. The car's software infrastructure is made up of four tasks: the motor task, the steering task, the Lidar task, and the Bluetooth task.

The Bluetooth task receives commands from a computer or a phone to control the autonomous car. The available Bluetooth commands include:

- Switch between manual and automatic driving mode.

- Steering and movement (for use in manual mode).

- Movement speed adjustments (faster or slower).

- Steering speed adjustments (faster or slower).

- Change threshold values used in logic for dynamic calibration.
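The command set above can be illustrated with a small dispatcher. This is only a sketch in Python for readability, not the car's actual firmware: the single-character command encoding, the `CarState` fields, the step sizes, and the value ranges are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class CarState:
    """Shared settings the Bluetooth task would update (all names hypothetical)."""
    automatic: bool = False
    move: int = 0            # -1 = backward, 0 = stop, +1 = forward
    steer: int = 0           # -1 = left, 0 = straight, +1 = right
    move_speed: int = 50     # percent, assumed range 0..100
    steer_speed: int = 50    # percent, assumed range 0..100
    threshold_cm: int = 30   # assumed obstacle distance threshold


# Hypothetical one-character commands for manual driving.
MOVE_COMMANDS = {"f": 1, "b": -1, "s": 0}
STEER_COMMANDS = {"l": -1, "r": 1, "c": 0}


def clamp(value: int, low: int, high: int) -> int:
    """Keep an adjustable setting inside its allowed range."""
    return max(low, min(high, value))


def handle_command(state: CarState, cmd: str) -> None:
    """Apply one received command to the shared state."""
    if cmd == "m":
        # Switch between manual and automatic mode; stop the car on a switch.
        state.automatic = not state.automatic
        state.move, state.steer = 0, 0
    elif cmd == "+":
        state.move_speed = clamp(state.move_speed + 10, 0, 100)
    elif cmd == "-":
        state.move_speed = clamp(state.move_speed - 10, 0, 100)
    elif cmd == ">":
        state.steer_speed = clamp(state.steer_speed + 10, 0, 100)
    elif cmd == "<":
        state.steer_speed = clamp(state.steer_speed - 10, 0, 100)
    elif cmd == "t":
        state.threshold_cm = clamp(state.threshold_cm + 5, 5, 200)
    elif cmd == "g":
        state.threshold_cm = clamp(state.threshold_cm - 5, 5, 200)
    elif not state.automatic:
        # Direction commands are honored only in manual mode.
        if cmd in MOVE_COMMANDS:
            state.move = MOVE_COMMANDS[cmd]
        elif cmd in STEER_COMMANDS:
            state.steer = STEER_COMMANDS[cmd]
```

In this sketch the speed and threshold adjustments work in both modes, while direction commands are ignored in automatic mode. That split is a design assumption rather than something the implementation is known to do.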

The Lidar task runs continuously and updates a global array with information about obstacles at every degree. In manual mode, the motor task moves the car forward or backward based on flags set by the Bluetooth task. The steering task behaves similarly in manual mode, except that it turns the car left or right. In automatic mode, both the motor task and the steering task compare threshold values against the global array populated by the Lidar task to decide how to move.
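The automatic-mode comparison can be sketched as follows. This is a Python illustration under stated assumptions, not the car's actual logic: the array is assumed to hold one distance in centimeters per degree, angles are assumed to run clockwise with 0 at the front, a value of 0 is assumed to mean "no reading", and the sector width and the default of driving forward when nothing is blocked are made up for the example.

```python
NO_READING = 0  # assumed marker for "no valid distance at this angle"


def sector_blocked(distances_cm, center_deg, half_width_deg, threshold_cm):
    """Return True if any reading in the sector is closer than the threshold."""
    for offset in range(-half_width_deg, half_width_deg + 1):
        d = distances_cm[(center_deg + offset) % 360]
        if d != NO_READING and d < threshold_cm:
            return True
    return False


def decide(distances_cm, threshold_cm, half_width_deg=20):
    """Return (move, steer): move is -1/0/+1 for backward/stop/forward,
    steer is -1/0/+1 for left/straight/right."""
    front = sector_blocked(distances_cm, 0, half_width_deg, threshold_cm)
    right = sector_blocked(distances_cm, 90, half_width_deg, threshold_cm)
    rear = sector_blocked(distances_cm, 180, half_width_deg, threshold_cm)
    left = sector_blocked(distances_cm, 270, half_width_deg, threshold_cm)

    # Motor decision: back away from a front obstacle only if the rear is free.
    if front and rear:
        move = 0
    elif front:
        move = -1
    else:
        move = 1  # assumed default: keep driving forward

    # Steering decision: turn away from a side obstacle only if the other side is free.
    if left and not right:
        steer = 1
    elif right and not left:
        steer = -1
    else:
        steer = 0
    return move, steer
```

For example, with an otherwise empty scan and a single close reading at 0 degrees, `decide` returns `(-1, 0)`: the car reverses because the rear sector is clear.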

System Integration

Verification

Technical Challenges

- Lidar needs to spin at a certain rate for reliable data.

- Lidar readings can be corrupted; such readings should be detected and discarded in software.
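Both challenges can be handled by validating each reading before it is stored. The sketch below is in Python and is not the car's actual code: the RPM window, the plausible distance range, and the `checksum_ok` flag are hypothetical, since the real limits depend on the Lidar unit used.

```python
# Assumed limits; the real values depend on the Lidar unit.
MIN_RPM, MAX_RPM = 180.0, 360.0
MIN_CM, MAX_CM = 5, 600


def accept_reading(rpm, distance_cm, checksum_ok):
    """Return True only for readings that look trustworthy."""
    if not checksum_ok:
        return False                      # packet was corrupted in transit
    if not (MIN_RPM <= rpm <= MAX_RPM):
        return False                      # spin rate outside the reliable window
    return MIN_CM <= distance_cm <= MAX_CM


def update_scan(scan, angle_deg, rpm, distance_cm, checksum_ok):
    """Store a reading in the per-degree array if it passes validation.

    Rejected readings leave the previous value in place (an assumption;
    clearing the slot instead would be equally reasonable).
    """
    if accept_reading(rpm, distance_cm, checksum_ok):
        scan[angle_deg % 360] = distance_cm
        return True
    return False
```

Keeping the previous value on rejection means one bad packet does not open a gap in the scan, at the cost of briefly acting on stale data.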

Future Enhancements

Currently, the car uses only some of the available Lidar data when driving autonomously. Much more complex driving patterns and obstacle avoidance would be possible if more of the data points were used. This could also eliminate blind spots the car might have. Further enhancements could come in the form of:

- GPS support so the car can drive autonomously toward a destination.

- Some form of memory so the car can remember obstacles and use them for intelligent routing.